How I Obtained Started With Deepseek

페이지 정보

본문

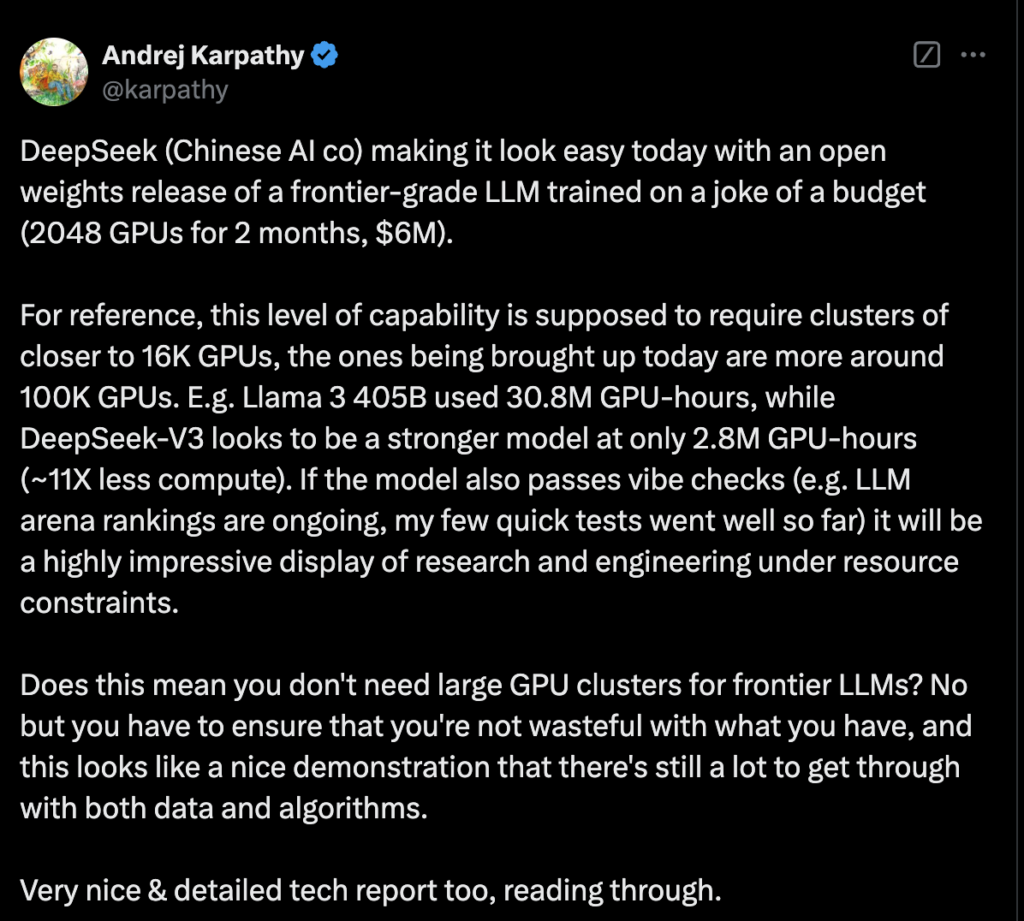

Despite its large measurement, DeepSeek v3 maintains efficient inference capabilities by way of innovative structure design. It options a Mixture-of-Experts (MoE) structure with 671 billion parameters, activating 37 billion for each token, enabling it to carry out a big selection of tasks with excessive proficiency. DeepSeek v3 represents the newest advancement in massive language models, featuring a groundbreaking Mixture-of-Experts architecture with 671B complete parameters. 671B total parameters for in depth knowledge illustration. This method permits DeepSeek V3 to achieve performance levels comparable to dense fashions with the identical number of total parameters, regardless of activating solely a fraction of them. Built on progressive Mixture-of-Experts (MoE) structure, DeepSeek v3 delivers state-of-the-artwork performance throughout varied benchmarks while sustaining environment friendly inference. Deepseek’s crushing benchmarks. It is best to undoubtedly check it out! The Qwen crew has been at this for some time and the Qwen models are used by actors within the West in addition to in China, suggesting that there’s a good chance these benchmarks are a real reflection of the efficiency of the models.

Despite its large measurement, DeepSeek v3 maintains efficient inference capabilities by way of innovative structure design. It options a Mixture-of-Experts (MoE) structure with 671 billion parameters, activating 37 billion for each token, enabling it to carry out a big selection of tasks with excessive proficiency. DeepSeek v3 represents the newest advancement in massive language models, featuring a groundbreaking Mixture-of-Experts architecture with 671B complete parameters. 671B total parameters for in depth knowledge illustration. This method permits DeepSeek V3 to achieve performance levels comparable to dense fashions with the identical number of total parameters, regardless of activating solely a fraction of them. Built on progressive Mixture-of-Experts (MoE) structure, DeepSeek v3 delivers state-of-the-artwork performance throughout varied benchmarks while sustaining environment friendly inference. Deepseek’s crushing benchmarks. It is best to undoubtedly check it out! The Qwen crew has been at this for some time and the Qwen models are used by actors within the West in addition to in China, suggesting that there’s a good chance these benchmarks are a real reflection of the efficiency of the models.

DeepSeek v3 incorporates superior Multi-Token Prediction for enhanced efficiency and inference acceleration. This not solely improves computational effectivity but in addition significantly reduces training costs and inference time. ✅ Model Parallelism: Spreads computation throughout multiple GPUs/TPUs for environment friendly coaching. One of many standout features of DeepSeek-R1 is its transparent and aggressive pricing mannequin. However, we don't have to rearrange experts since every GPU solely hosts one professional. Its superior algorithms are designed to adapt to evolving AI writing trends, making it probably the most reliable instruments accessible. Succeeding at this benchmark would present that an LLM can dynamically adapt its knowledge to handle evolving code APIs, moderately than being restricted to a fixed set of capabilities. Benchmark studies show that Deepseek's accuracy fee is 7% higher than GPT-four and 10% larger than LLaMA 2 in actual-world scenarios. As Reuters reported, some lab specialists consider DeepSeek's paper solely refers to the final coaching run for V3, not its entire improvement value (which can be a fraction of what tech giants have spent to construct competitive fashions). Founded in 2023 by a hedge fund supervisor, Liang Wenfeng, the corporate is headquartered in Hangzhou, China, and focuses on developing open-source massive language fashions.

DeepSeek v3 incorporates superior Multi-Token Prediction for enhanced efficiency and inference acceleration. This not solely improves computational effectivity but in addition significantly reduces training costs and inference time. ✅ Model Parallelism: Spreads computation throughout multiple GPUs/TPUs for environment friendly coaching. One of many standout features of DeepSeek-R1 is its transparent and aggressive pricing mannequin. However, we don't have to rearrange experts since every GPU solely hosts one professional. Its superior algorithms are designed to adapt to evolving AI writing trends, making it probably the most reliable instruments accessible. Succeeding at this benchmark would present that an LLM can dynamically adapt its knowledge to handle evolving code APIs, moderately than being restricted to a fixed set of capabilities. Benchmark studies show that Deepseek's accuracy fee is 7% higher than GPT-four and 10% larger than LLaMA 2 in actual-world scenarios. As Reuters reported, some lab specialists consider DeepSeek's paper solely refers to the final coaching run for V3, not its entire improvement value (which can be a fraction of what tech giants have spent to construct competitive fashions). Founded in 2023 by a hedge fund supervisor, Liang Wenfeng, the corporate is headquartered in Hangzhou, China, and focuses on developing open-source massive language fashions.

The company constructed a cheaper, aggressive chatbot with fewer excessive-end pc chips than U.S. Sault Ste. Marie city council is about to debate a potential ban on DeepSeek, a well-liked AI chatbot developed by a Chinese company. 5. They use an n-gram filter to get rid of check data from the train set. Contact Us: Get a customized consultation to see how DeepSeek can transform your workflow. AI will be an amazingly highly effective expertise that benefits humanity if used accurately. Meanwhile, momentum-based mostly methods can obtain the perfect model high quality in synchronous FL. Free DeepSeek v3 can handle endpoint creation, authentication, and even database queries, lowering the boilerplate code you want to write. ???? Its 671 billion parameters and multilingual help are impressive, and the open-supply approach makes it even higher for customization. It threatened the dominance of AI leaders like Nvidia and contributed to the largest drop in US inventory market historical past, with Nvidia alone shedding $600 billion in market value. Trained in simply two months using Nvidia H800 GPUs, with a remarkably efficient improvement cost of $5.5 million.

Transform your social media presence using DeepSeek Video Generator. Create engaging academic content with DeepSeek Video Generator. Create beautiful product demonstrations, model stories, and promotional content that captures consideration. Our AI video generator creates trending content material formats that keep your audience coming again for extra. Knowledge Distillation: Rather than training its mannequin from scratch, DeepSeek’s AI learned from present fashions, extracting and refining data to train quicker, cheaper and extra effectively. DeepSeek v3 makes use of a sophisticated MoE framework, allowing for an enormous mannequin capability whereas maintaining efficient computation. The model supports a 128K context window and delivers efficiency comparable to leading closed-source models whereas maintaining efficient inference capabilities. This revolutionary mannequin demonstrates distinctive performance across various benchmarks, together with mathematics, coding, and multilingual duties. Alibaba has up to date its ‘Qwen’ collection of fashions with a brand new open weight model called Qwen2.5-Coder that - on paper - rivals the efficiency of a few of the best models within the West.

- 이전글مغامرات حاجي بابا الإصفهاني/النص الكامل 25.03.02

- 다음글مغامرات حاجي بابا الإصفهاني/النص الكامل 25.03.02

댓글목록

등록된 댓글이 없습니다.