How Does DeepSeek AI Detector Work?


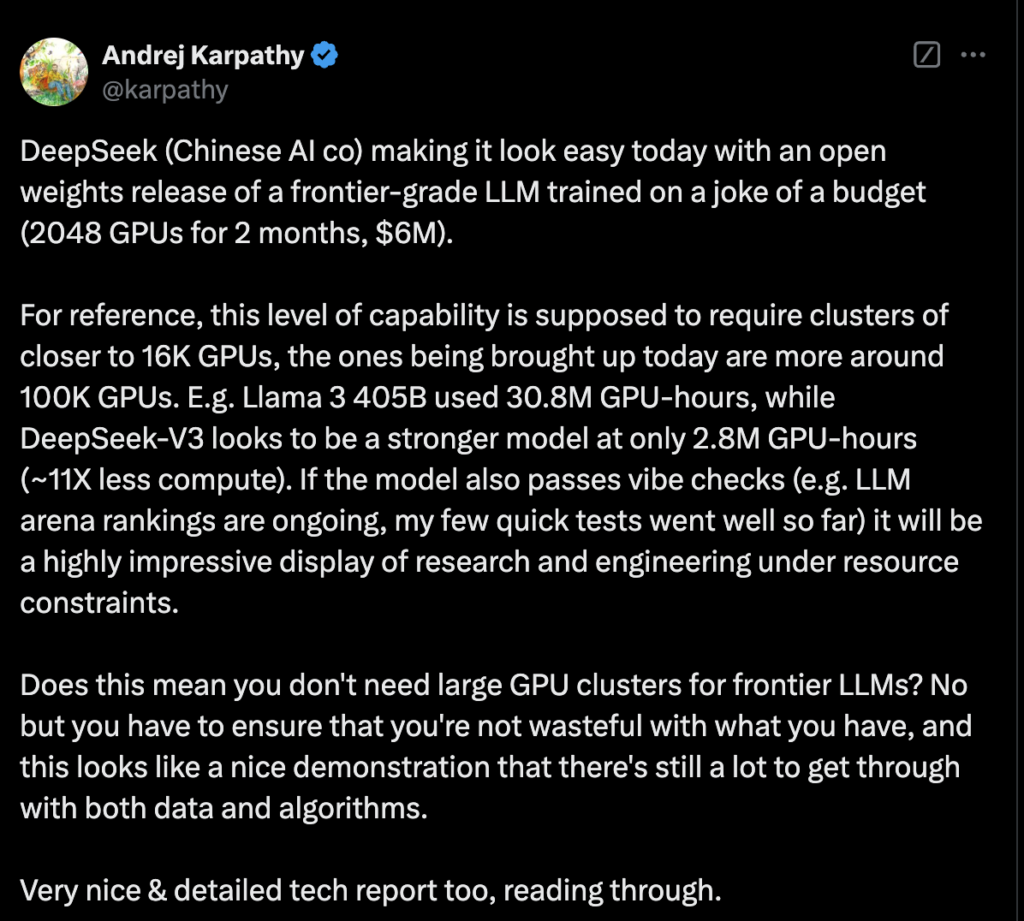

DeepSeek models that have been uncensored also show bias toward the Chinese government's viewpoints on controversial topics such as Xi Jinping's human rights record and Taiwan's political status. DeepSeek's competitive performance at relatively minimal cost has been recognized as potentially challenging the global dominance of American AI models. In a mixture-of-experts design, the model can have more parameters than it activates for each specific token, in a sense decoupling how much the model knows from the arithmetic cost of processing individual tokens. If, for example, each subsequent token gives a 15% relative reduction in acceptance, it might be possible to squeeze out some more gain from this speculative decoding setup by predicting a few more tokens ahead. The technology has many skeptics and opponents, but its advocates promise a bright future: AI will advance the global economy into a new era, they argue, making work more efficient and opening up new capabilities across multiple industries that will pave the way for new research and developments. The Chinese startup DeepSeek unveiled a new AI model last week that the company says is significantly cheaper to run than top alternatives from major US tech companies like OpenAI, Google, and Meta.
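To make the speculative decoding remark concrete, here is a minimal sketch of the arithmetic. It assumes one reading of the "15% relative reduction": the acceptance rate of each additional drafted token is 0.85 times that of the previous one, and a drafted token is only usable if every drafted token before it was accepted. The starting acceptance rate of 0.85 in the usage lines is a hypothetical value chosen for illustration, not a measured figure.

```python
def expected_accepted_tokens(first_acceptance: float,
                             relative_drop: float,
                             num_drafted: int) -> float:
    """Expected number of drafted tokens that end up accepted.

    Assumptions (illustrative, not taken from any specific model):
    - the first drafted token is accepted with probability `first_acceptance`;
    - each later token's acceptance rate is (1 - relative_drop) times the
      previous token's rate;
    - token k only counts if tokens 1..k-1 were all accepted.
    """
    expected = 0.0
    prefix_prob = 1.0          # probability that every token so far was accepted
    acceptance = first_acceptance
    for _ in range(num_drafted):
        prefix_prob *= acceptance
        expected += prefix_prob
        acceptance *= (1.0 - relative_drop)
    return expected


if __name__ == "__main__":
    # Hypothetical starting acceptance rate of 0.85 with a 15% relative drop.
    for n in range(1, 6):
        print(n, round(expected_accepted_tokens(0.85, 0.15, n), 4))
```

Under these assumptions the expected gain keeps growing with each extra drafted token, but by a smaller amount each time, which is why only "a few more tokens" are likely to be worth predicting.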

To see why, consider that any large language model likely has a small amount of information that it uses a lot, while it has a lot of information that it uses rather infrequently. This was followed by DeepSeek LLM, a 67B parameter model aimed at competing with other large language models. A popular method for avoiding routing collapse is to force "balanced routing", i.e. the property that each expert is activated roughly an equal number of times over a sufficiently large batch, by adding to the training loss a term measuring how imbalanced the expert routing was in a particular batch. However, if we don't force balanced routing, we face the risk of routing collapse. Shared experts are always routed to no matter what: they are excluded from both expert affinity calculations and any possible routing imbalance loss term. Many experts fear that the government of China could use the AI system for foreign influence operations, spreading disinformation, surveillance and the development of cyberweapons. This causes gradient descent optimization methods to behave poorly in MoE training, often resulting in "routing collapse", where the model gets stuck always activating the same few experts for every token instead of spreading its knowledge and computation around all the available experts.
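The balanced-routing loss term described above can be sketched as follows. This is one common formulation (the product of each expert's share of routed tokens and its mean router probability, scaled by the number of experts), used here purely as an illustration; it is an assumption that it resembles what any particular model uses. Only routed experts appear in it, since shared experts are excluded from the imbalance term. The function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def load_balance_loss(router_logits, top_k=1):
    """Auxiliary loss that grows as routing becomes more imbalanced.

    router_logits: one list of logits per token, over the routed experts only.
    Returns num_experts * sum_i(load_i * mean_prob_i), which equals 1.0 when
    every expert receives an equal share of the tokens.
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    load = [0.0] * num_experts       # fraction of routing slots given to each expert
    mean_prob = [0.0] * num_experts  # average router probability per expert

    for logits in router_logits:
        probs = softmax(logits)
        # Pick the top_k experts with the highest router probability.
        chosen = sorted(range(num_experts), key=lambda i: probs[i], reverse=True)[:top_k]
        for i in chosen:
            load[i] += 1.0 / (num_tokens * top_k)
        for i, p in enumerate(probs):
            mean_prob[i] += p / num_tokens

    return num_experts * sum(f * p for f, p in zip(load, mean_prob))


if __name__ == "__main__":
    balanced = [[2, 0, 0, 0], [0, 2, 0, 0], [0, 0, 2, 0], [0, 0, 0, 2]]
    collapsed = [[2, 0, 0, 0]] * 4   # every token prefers expert 0
    print(load_balance_loss(balanced), load_balance_loss(collapsed))
```

Adding a small multiple of this value to the training loss penalizes batches in which a few experts receive most of the tokens, which is the pressure toward balanced routing that the paragraph describes.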

To escape this dilemma, DeepSeek separates experts into two types: shared experts and routed experts. KL divergence is a standard "unit of distance" between two probability distributions, although it is not symmetric and so is not a true distance. Whether you're looking for a quick summary of an article, help with writing, or code debugging, the app works by using advanced AI models to deliver relevant results in real time. It is a cry for help. Once you see the approach, it's immediately obvious that it cannot be any worse than grouped-query attention and it's also likely to be significantly better. Methods such as grouped-query attention exploit the possibility of the same overlap, but they do so ineffectively by forcing attention heads that are grouped together to all respond similarly to queries. Exploiting the fact that different heads need access to the same information is essential for the mechanism of multi-head latent attention. If we used low-rank compression on the key and value vectors of individual heads instead of all keys and values of all heads stacked together, the method would simply be equivalent to using a smaller head dimension to begin with and we would get no gain. This training was done using Supervised Fine-Tuning (SFT) and Reinforcement Learning. This not only gives them an extra target to get signal from during training but also allows the model to be used to speculatively decode itself.
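As a small illustration of the KL divergence mentioned above, the sketch below computes it for two discrete distributions given as plain lists of probabilities. The function name and the example distributions are illustrative choices, not taken from any DeepSeek code.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same outcomes."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of outcomes")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue             # a zero-probability outcome in p contributes nothing
        if qi == 0.0:
            return math.inf      # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total


if __name__ == "__main__":
    p = [0.5, 0.5]
    q = [0.9, 0.1]
    print(kl_divergence(p, q))   # divergence of q from p
    print(kl_divergence(q, p))   # a different value: KL is not symmetric
```

Swapping the arguments gives a different number, which is why KL divergence is better thought of as a directed measure of mismatch than as a distance in the geometric sense.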
