The Talk Over What Is ChatGPT


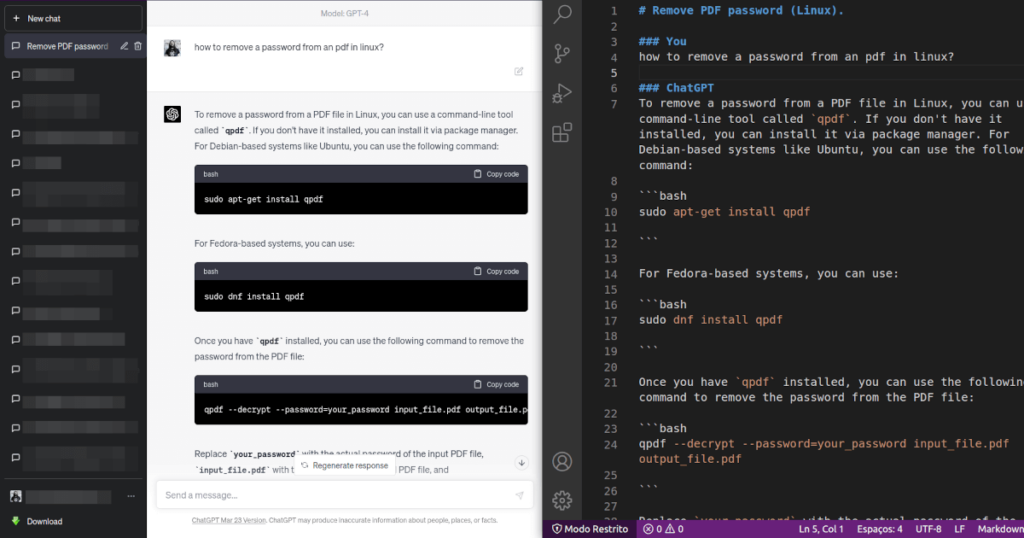

The upshot is that in just under a month, there have been three recorded incidents of employees leaking sensitive information through ChatGPT. Employees in the Slack channel said they were able to bypass that message by simply clicking the "Acknowledge" tab. But Jeffrey Ding, an assistant professor at Georgetown University who studies China's tech industry, says that concerns about censorship don't seem to have slowed the development of large language models in China. The Paperclip Maximiser thought experiment, coined by philosopher Nick Bostrom of the University of Oxford, is a hypothetical scenario in which an AI system creates as many literal paperclips as possible. Then again, the tech is advancing so fast that someone may well work out a way to squeeze these models down enough that you can do it. A better way to scale would be multi-GPU, where each card holds part of the model. And part 9 offers gratuitous explanations that contradict the zero-probability answer it just gave. It can also now respond to images and answer questions about visual stimuli. This chapter showed how ChatGPT can help you with coding. Join today and help shape the future of Turnitin. If today's models still work on the same general principles as what I saw in an AI class I took a long time ago, signals usually pass through sigmoid functions that push them toward 0/1 (or whatever numerical range the model layer operates on), so extra precision would only matter in cases where rounding at higher precision would cause enough nodes to snap the other way and change the output layer's result.
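
The sigmoid argument at the end of that paragraph can be checked with a toy experiment. A minimal sketch in standard-library Python, assuming a single classic sigmoid neuron with random inputs and weights, where "lower precision" is simulated by rounding each weight to a multiple of a hypothetical `step` (real 8-bit and 4-bit formats work differently). The output moves only slightly, and the 0/1 decision flips only in trials where the full-precision pre-activation was already closer to zero than the rounding error.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic function: squashes any real pre-activation into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def quantize(w: float, step: float) -> float:
    """Round a weight to the nearest multiple of `step` (a crude stand-in for fewer bits)."""
    return round(w / step) * step


def dot(xs: list[float], ws: list[float]) -> float:
    """Pre-activation: the weighted sum of a neuron's inputs."""
    return sum(x * w for x, w in zip(xs, ws))


def precision_experiment(n_inputs: int = 256, trials: int = 1000,
                         step: float = 1 / 16, seed: int = 0):
    """Compare one sigmoid neuron at full precision against the same neuron with rounded weights.

    Returns (mean |change in sigmoid output|, fraction of 0/1 decisions that flipped,
    list of (|z_full|, |z_full - z_rounded|) pairs for the trials that flipped).
    """
    rng = random.Random(seed)
    total_change, flipped = 0.0, []
    for _ in range(trials):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        ws = [rng.gauss(0.0, 0.1) for _ in range(n_inputs)]
        z_full = dot(xs, ws)
        z_rounded = dot(xs, [quantize(w, step) for w in ws])
        total_change += abs(sigmoid(z_full) - sigmoid(z_rounded))
        # sigmoid(z) >= 0.5 exactly when z >= 0, so the 0/1 decision is the sign of z.
        if (z_full >= 0) != (z_rounded >= 0):
            flipped.append((abs(z_full), abs(z_full - z_rounded)))
    return total_change / trials, len(flipped) / trials, flipped


if __name__ == "__main__":
    mean_change, flip_rate, flips = precision_experiment()
    print(f"mean output change: {mean_change:.4f}, decision flip rate: {flip_rate:.3f}")
    # A flip can only happen when |z_full| is no larger than the rounding error.
    print(all(z <= err for z, err in flips))
```

Weights are drawn fresh in every trial, so this is a statistical illustration rather than a model of any real network. Making `step` coarser (say 1/4) should make the flip rate grow as precision drops.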


This is called a dataflow architecture, and it's becoming a very popular way to scale AI processing. I asked ChatGPT about this and it only gives me the speed of processing input (e.g., input length / tokens per second). And more than half of the teachers surveyed reported using ChatGPT at least once since its release. Despite concerns about whether students are using ChatGPT to cheat on exams or as a shortcut for their coursework, a national survey shows that students and teachers have quickly incorporated the new technology into their everyday lives. Again, these are all preliminary results, and the article text should make that very clear. Does the CPU make a difference for Stable Diffusion? Given that a 9900K was noticeably slower than the 12900K, it appears to be fairly CPU-limited, with a high dependence on single-threaded performance. Try as I might, at least under Windows I can't get performance to scale beyond about 25 tokens/s on responses with llama-13b-4bit.
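
The paragraph measures speed in tokens per second and estimates duration as input length divided by that rate. A minimal sketch of both measurements, assuming a hypothetical `fake_generate` that stands in for a model: it is not llama-13b-4bit or any real library's API, and simply yields one token every `delay_s` seconds.

```python
import time
from collections.abc import Callable, Iterable


def tokens_per_second(generate: Callable[[], Iterable[str]]) -> tuple[int, float, float]:
    """Time one full generation; return (token_count, elapsed_seconds, tokens_per_second)."""
    start = time.perf_counter()
    count = sum(1 for _ in generate())
    elapsed = time.perf_counter() - start
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, elapsed, rate


def estimate_seconds(length_tokens: int, rate_tokens_per_s: float) -> float:
    """Back-of-the-envelope duration: length divided by throughput."""
    return length_tokens / rate_tokens_per_s


def fake_generate(n_tokens: int = 50, delay_s: float = 0.01):
    """Hypothetical stand-in for a model: yields one token every `delay_s` seconds."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    count, elapsed, rate = tokens_per_second(lambda: fake_generate(50, 0.01))
    print(f"{count} tokens in {elapsed:.2f} s -> {rate:.1f} tokens/s")
    # At the ~25 tokens/s mentioned above, a 500-token reply takes about 20 s.
    print(f"500 tokens at 25 tokens/s: {estimate_seconds(500, 25.0):.0f} s")
```

Timing the whole generation with `time.perf_counter` captures everything the caller waits for; a real benchmark would usually separate the prompt-processing phase from per-token generation, since the two run at different speeds.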

I'd start by reading up on tips for optimizing PyTorch performance on Windows. Learn how to convert web pages to Mobi format for easy reading on your Kindle or other e-readers. "A lot of autistic people have grown up being told that they're aliens, or that they sound like robots, or that there's just something wrong with them," Young says. Based on what I've read, the 8-bit and 4-bit versions are supposed to be almost the same quality. Considering PCIe 4.0 x16 has a theoretical limit of 32 GB/s, you'd only be able to read in the other half of the model about 2.5 times per second. As data passes from the early layers of the model to the later ones, it is handed off to the second GPU. This isn't a very accurate description, and I've used anthropomorphizing words like "attention" and "focus" above, for which I apologise; in reality the model is a collection of tokenization, embedding, encoding and decoding steps that pass large arrays of numeric data through multiple layers of functions, with weights that were optimized during training. A "token" is roughly just a word (things like parts of a URL also qualify as tokens, I think, which is why it's not strictly a one-to-one equivalence).
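
The hand-off between GPUs described above can be sketched without any GPU at all. A minimal sketch, assuming a model that is just an ordered list of layers; `make_layer` is a hypothetical toy layer, the "devices" are plain Python lists, and the hand-off counter marks where a real implementation would copy activations from one card to the next (in PyTorch that copy is typically a `Tensor.to()` call onto the second device).

```python
from collections.abc import Callable, Sequence

Layer = Callable[[list[float]], list[float]]


def make_layer(scale: float, shift: float) -> Layer:
    """Hypothetical toy layer: elementwise scale-and-shift followed by ReLU."""
    return lambda xs: [max(0.0, scale * x + shift) for x in xs]


def split_layers(layers: Sequence[Layer], n_devices: int) -> list[list[Layer]]:
    """Give each device a contiguous block of layers: early layers first, later ones after."""
    per_device = max(1, -(-len(layers) // n_devices))  # ceiling division
    return [list(layers[i:i + per_device]) for i in range(0, len(layers), per_device)]


def run_pipeline(stages: list[list[Layer]], xs: list[float]) -> tuple[list[float], int]:
    """Push activations through each device's layers in turn; count device-to-device hand-offs."""
    handoffs = 0
    for i, stage in enumerate(stages):
        for layer in stage:
            xs = layer(xs)
        if i < len(stages) - 1:
            handoffs += 1  # on real GPUs, activations are copied to the next card here
    return xs, handoffs


if __name__ == "__main__":
    layers = [make_layer(1.0, 0.5) for _ in range(6)]
    stages = split_layers(layers, n_devices=2)
    out, handoffs = run_pipeline(stages, [0.0, 1.0])
    print([len(s) for s in stages], out, handoffs)
```

Splitting by contiguous blocks means only one activation transfer per boundary per forward pass, which is why this layout is attractive when the model is too large for a single card.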

It's one of the ways we keep the lights on here. Say you want to know (as Galileo did back in the late 1500s) how long it will take a cannonball dropped from each floor of the Tower of Pisa to hit the ground. At the end of that article, you can see from the version history that it originated all the way back in 2014. However, the latest update was only 1.5 months ago, and it now includes both the RTX 4000 series and the H100. For example, researchers have found that the way you phrase a question to ChatGPT significantly changes its response, including how effectively it rejects malicious requests. When you have a lot of inputs, most of the rounding noise should cancel itself out and not make much of a difference. If we make the simplistic assumption that the entire network has to be used for every token, and your model is too big to fit in GPU memory (e.g., trying to run a 24 GB model on a 12 GB GPU), then you may end up having to pull in the remaining 12 GB on every iteration.
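
Under that simplistic assumption, the transfer alone caps generation speed. A minimal sketch using the figures from the post (24 GB model, 12 GB GPU, 32 GB/s theoretical PCIe 4.0 x16 limit); `streaming_bound` is a hypothetical helper that ignores compute time, overlap of transfer with compute, and real-world link efficiency, so its result is an upper bound.

```python
def streaming_bound(model_gb: float, vram_gb: float, link_gb_per_s: float) -> float:
    """Upper bound on tokens/sec when the part of the model that does not fit in VRAM
    must be streamed over the host link for every token (transfer is the only cost)."""
    spill_gb = max(0.0, model_gb - vram_gb)
    if spill_gb == 0:
        return float("inf")  # everything fits: no per-token transfer bottleneck
    return link_gb_per_s / spill_gb


if __name__ == "__main__":
    rate = streaming_bound(model_gb=24, vram_gb=12, link_gb_per_s=32)
    print(f"at most {rate:.2f} tokens/s, i.e. {1 / rate:.3f} s per token")
```

The ideal figure is 32 / 12 ≈ 2.67 reads of the spilled half per second; since real transfers fall short of the theoretical link speed, "about 2.5 times per second" is a reasonable rounded estimate.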

