Four Horrible Mistakes to Avoid When You (Do) DeepSeek

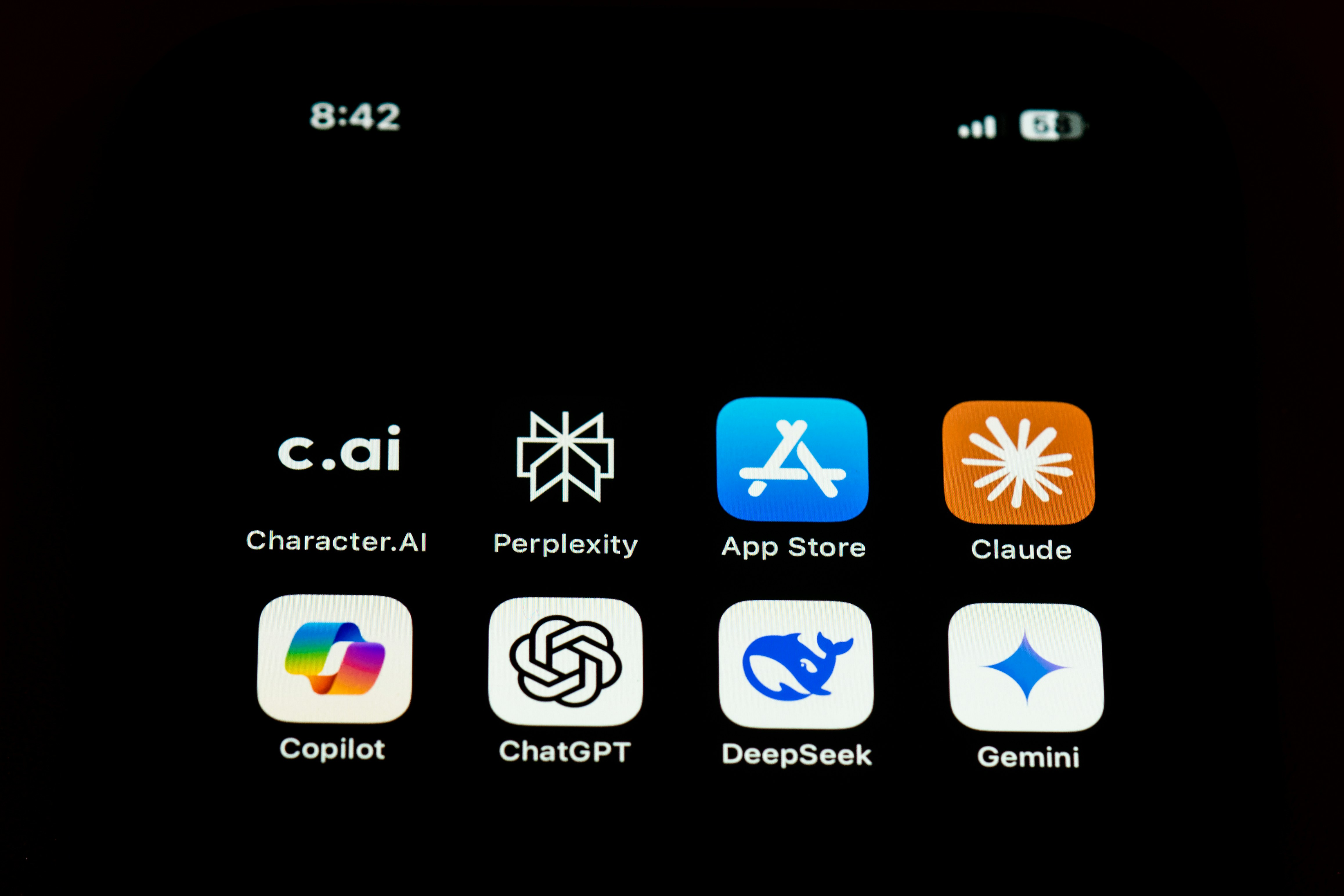

If you're an everyday user and want to use DeepSeek Chat instead of ChatGPT or other AI models, you may be able to use it at no cost if it is offered through a platform that provides free access (such as the official DeepSeek Chat website or third-party applications). Because of the performance of both the large 70B Llama 3 model and the smaller, self-hostable 8B Llama 3, I've actually cancelled my ChatGPT subscription in favor of Open WebUI, a self-hostable ChatGPT-like UI that lets you use Ollama and other AI providers while keeping your chat history, prompts, and other data locally on any computer you control. My earlier article went over how to get Open WebUI set up with Ollama and Llama 3, but that isn't the only way I take advantage of Open WebUI. DeepSeek's use of synthetic data isn't revolutionary, either, though it does show that it's possible for AI labs to create something useful without scraping the entire web. That is how I was able to use and evaluate Llama 3 as my replacement for ChatGPT! When it comes to chatting with the chatbot, it's exactly the same as using ChatGPT: you simply type something into the prompt bar, like "Tell me about the Stoics", and you get an answer, which you can then expand with follow-up prompts, like "Explain that to me like I'm a 6-year-old".
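The prompt and follow-up flow described above can be sketched as a growing list of chat messages. This is a minimal illustration, assuming the OpenAI-style `role`/`content` message format that ChatGPT-like UIs commonly send to their backends; the helper names, the placeholder reply, and the model tag `llama3:8b` are hypothetical and not taken from the article.

```python
import json

# Assumption: messages use the OpenAI-style {"role", "content"} chat format.
# All function names and the model tag below are illustrative only.

def start_conversation(first_prompt):
    """Begin a chat history with the user's first prompt."""
    return [{"role": "user", "content": first_prompt}]

def add_follow_up(history, assistant_reply, follow_up_prompt):
    """Return a new history with the model's reply and the next user prompt."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": follow_up_prompt},
    ]

def build_payload(model, history):
    """Serialize the request body a chat backend would receive."""
    return json.dumps({"model": model, "messages": history})

history = start_conversation("Tell me about the Stoics")
history = add_follow_up(
    history,
    "(placeholder for the model's first answer)",
    "Explain that to me like I'm a 6-year-old",
)
payload = build_payload("llama3:8b", history)  # hypothetical model tag
```

Because the whole history is resent on each turn, the follow-up prompt is answered in the context of the first question, and storing that list locally is what keeping the chat history on your own machine amounts to.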

Using GroqCloud with Open WebUI is possible thanks to an OpenAI-compatible API that Groq provides. With the ability to seamlessly integrate multiple APIs, including OpenAI, GroqCloud, and Cloudflare Workers AI, I've been able to unlock the full potential of these powerful AI models. Groq is an AI hardware and infrastructure company that's developing its own LLM hardware chip (which it calls an LPU). Constellation Energy (CEG), the company behind the planned revival of the Three Mile Island nuclear plant for powering AI, fell 21% Monday. DeepSeek grabbed headlines in late January with its R1 AI model, which the company says can roughly match the performance of OpenAI's o1 model at a fraction of the cost. Cost considerations: priced at $3 per million input tokens and $15 per million output tokens, which is higher than DeepSeek-V3. "Reinforcement learning is notoriously tricky, and small implementation differences can lead to major performance gaps," says Elie Bakouch, an AI research engineer at Hugging Face. Inspired by the promising results of DeepSeek-R1-Zero, two natural questions arise: 1) Can reasoning performance be further improved or convergence accelerated by incorporating a small amount of high-quality data as a cold start? A natural question arises regarding the acceptance rate of the additionally predicted token.
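To make the per-token pricing quoted above concrete, here is a small cost estimate. It is a sketch that uses only the $3 and $15 per million token rates from the text; the function name and the example token counts are hypothetical.

```python
# Rates quoted in the text, in USD per one million tokens.
INPUT_USD_PER_MILLION = 3.00
OUTPUT_USD_PER_MILLION = 15.00

def estimate_cost_usd(input_tokens, output_tokens,
                      input_rate=INPUT_USD_PER_MILLION,
                      output_rate=OUTPUT_USD_PER_MILLION):
    """Estimate the cost of one request from its token counts."""
    input_cost = input_tokens / 1_000_000 * input_rate
    output_cost = output_tokens / 1_000_000 * output_rate
    return input_cost + output_cost

# Hypothetical request: a 2,000-token prompt and a 500-token answer.
single_request = estimate_cost_usd(2_000, 500)

# One million tokens in each direction costs the sum of both rates.
full_million_each = estimate_cost_usd(1_000_000, 1_000_000)
```

Output tokens cost five times as much as input tokens at these rates, so long answers dominate the bill even when prompts are large.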

Currently Llama 3 8B is the largest model supported, and they have token generation limits much smaller than some of the models available. DeepSeek's commitment to open-source models is democratizing access to advanced AI technologies, enabling a broader spectrum of users, including smaller businesses, researchers, and developers, to engage with cutting-edge AI tools. As more capabilities and tools come online, organizations need to prioritize interoperability as they look to leverage the latest developments in the field and retire outdated tools. The CodeUpdateArena benchmark represents an important step forward in assessing the capabilities of LLMs in the code generation domain, and the insights from this research can help drive the development of more robust and adaptable models that can keep pace with the rapidly evolving software landscape. This paper presents a new benchmark called CodeUpdateArena to evaluate how well large language models (LLMs) can update their knowledge about evolving code APIs, a critical limitation of current approaches. This model is designed to process large volumes of data, uncover hidden patterns, and provide actionable insights. Although Llama 3 70B (and even the smaller 8B model) is good enough for 99% of people and tasks, sometimes you just want the best, so I like having the option either to quickly answer my question or to use it alongside other LLMs to quickly get options for an answer.
