Is This DeepSeek ChatGPT Thing Actually That Hard?


Moreover, to further reduce memory and communication overhead in MoE training, we cache and dispatch activations in FP8, while storing low-precision optimizer states in BF16. With a minor overhead, this strategy significantly reduces the memory required to store activations. For DeepSeek-V3, the communication overhead introduced by cross-node expert parallelism results in an inefficient computation-to-communication ratio of approximately 1:1. To address this challenge, we design an innovative pipeline parallelism algorithm called DualPipe, which not only accelerates model training by effectively overlapping forward and backward computation-communication phases, but also reduces pipeline bubbles. DeepSeek-V3 exemplifies the power of innovation and strategic design in generative AI. The training of DeepSeek-V3 is supported by the HAI-LLM framework, an efficient and lightweight training framework crafted by our engineers from the ground up. Under this constraint, our MoE training framework can nearly achieve full computation-communication overlap. Thanks to the effective load balancing strategy, DeepSeek-V3 keeps a good load balance throughout its full training.
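To make the memory claim concrete, here is a minimal back-of-the-envelope sketch of how much space a cached activation tensor takes in BF16 versus FP8. The function name `cached_bytes`, the tensor shape, and the idea of storing one 4-byte scaling factor per group of 128 elements are all assumptions for illustration; the text above only says that FP8 caching comes "with a minor overhead".

```python
# Bytes used to store one element in each format.
BYTES_PER_ELEMENT = {"fp32": 4, "bf16": 2, "fp8": 1}


def cached_bytes(num_elements, dtype, group_size=None, scale_bytes=4):
    """Return the bytes needed to cache `num_elements` values in `dtype`.

    If `group_size` is given, one scaling factor of `scale_bytes` bytes is
    stored per group of that many elements (an assumed quantization layout,
    not something stated in the article).
    """
    payload = num_elements * BYTES_PER_ELEMENT[dtype]
    if group_size is None:
        return payload
    num_groups = -(-num_elements // group_size)  # ceiling division
    return payload + num_groups * scale_bytes


if __name__ == "__main__":
    # Hypothetical activation tensor: 4096 tokens x 4096 hidden units.
    n = 4096 * 4096
    bf16 = cached_bytes(n, "bf16")
    fp8 = cached_bytes(n, "fp8", group_size=128)
    print(f"BF16 cache: {bf16 / 2**20:.1f} MiB")
    print(f"FP8 cache:  {fp8 / 2**20:.1f} MiB ({fp8 / bf16:.1%} of BF16)")
```

Under these assumed numbers the FP8 cache is a little over half the size of the BF16 one, with the per-group scaling factors accounting for the small extra cost.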


DeepSeek-V3 is trained on a cluster equipped with 2048 NVIDIA H800 GPUs. Huawei has been working with AI companies, including DeepSeek, to adapt models trained on Nvidia GPUs to run inference on its Ascend chips. He said that the constraints on US chips available in China meant companies such as DeepSeek had been pushed into a corner, leading them to innovate from both an engineering and an algorithm perspective. Macron hopes to make room for others, including French startup Mistral, which also uses an open-source AI model. Facing ongoing U.S. export restrictions on technology products and services, China has responded to the resulting scarcity by sharpening its focus and accelerating its development efforts. Operating under restrictions from US semiconductor export controls, the Hangzhou-based company has achieved what many thought improbable: building a competitive large language model (LLM) at a fraction of the cost typically associated with such systems. DeepSeek-Coder-V2 expanded the capabilities of the original coding model. For Yann LeCun, Meta’s chief AI scientist, DeepSeek is less about China’s AI capabilities and more about the broader power of open-source innovation. On the other hand, those who believe Chinese development stems from the country’s ability to cultivate indigenous capabilities would see American technology bans, sanctions, tariffs, and other barriers as accelerants, rather than obstacles, to Chinese growth.

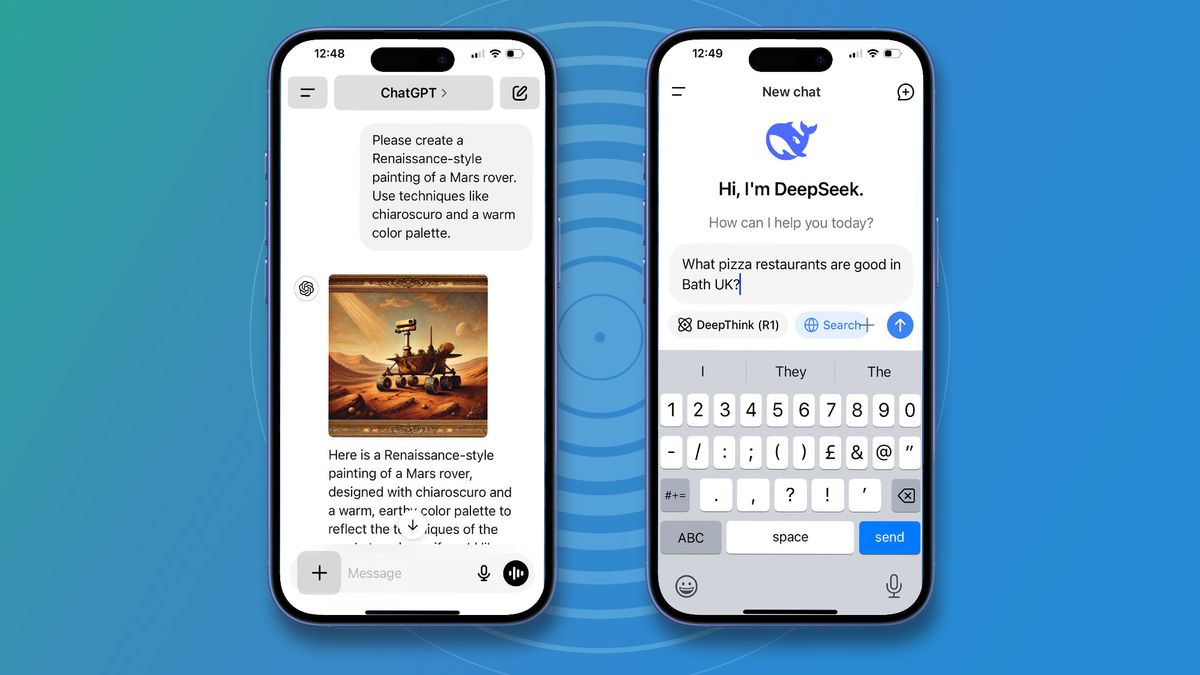

But I will play with it a bit more and see if I can get it to a stage where it's useful, even if it is only useful for me. It will inevitably take time before investors get a good grasp on just how concerning a problem DeepSeek's AI development is or is not for the tech sector. Little known before January, the AI assistant's launch has fueled optimism for AI innovation, challenging the dominance of US tech giants that rely on massive investments in chips, data centers and energy. On the one hand, an MTP (multi-token prediction) objective densifies the training signals and may improve data efficiency. The US may still go on to dominate the field, but there is a sense that DeepSeek has shaken some of that swagger. OpenAI, the U.S.-based company behind ChatGPT, now claims DeepSeek may have improperly used its proprietary data to train its model, raising questions about whether DeepSeek’s success was truly an engineering marvel.

That, however, prompted a crackdown on what Beijing deemed to be speculative trading, so in 2023, Liang spun off his company’s research division into DeepSeek, a company focused on advanced AI research. The company actively recruits young AI researchers from top Chinese universities and, unusually, hires people from outside the computer science field to broaden its models' knowledge across various domains.

Through the dynamic adjustment, DeepSeek-V3 keeps expert load balanced during training, and achieves better performance than models that encourage load balance through pure auxiliary losses. In addition, we implement specific deployment strategies to ensure inference load balance, so DeepSeek-V3 also does not drop tokens during inference. Even in more general scenarios without a heavy communication burden, DualPipe still exhibits efficiency advantages. Both dispatching and combining kernels overlap with the computation stream, so we also consider their impact on other SM computation kernels. To ensure sufficient computational performance for DualPipe, we customize efficient cross-node all-to-all communication kernels (including dispatching and combining) to conserve the number of SMs dedicated to communication. Like the device-limited routing used by DeepSeek-V2, DeepSeek-V3 also uses a restricted routing mechanism to limit communication costs during training.
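The "dynamic adjustment" mentioned above can be illustrated with a toy simulation. The sketch below assumes one plausible form of it: each expert carries a routing bias that is nudged down when the expert is overloaded and up when it is underloaded, with no auxiliary loss term. The function names, the skewed score distribution, and every numeric setting are hypothetical choices for illustration, not details taken from the article.

```python
import random


def route_top_k(scores, bias, k):
    """Pick the k experts with the highest (score + bias)."""
    ranked = sorted(range(len(scores)),
                    key=lambda e: scores[e] + bias[e], reverse=True)
    return ranked[:k]


def update_bias(bias, load, step_size):
    """Lower the bias of overloaded experts, raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step_size)
        elif n < mean_load:
            new_bias.append(b + step_size)
        else:
            new_bias.append(b)
    return new_bias


def simulate(num_experts=8, k=2, tokens_per_step=512, steps=200,
             step_size=0.01, seed=0):
    """Return the per-expert token counts of the first and last step."""
    rng = random.Random(seed)
    # Hypothetical skew: lower-numbered experts get higher affinity scores.
    skew = [0.3 * (num_experts - e) / num_experts for e in range(num_experts)]
    bias = [0.0] * num_experts
    first_load = last_load = None
    for step in range(steps):
        load = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [rng.random() + skew[e] for e in range(num_experts)]
            for e in route_top_k(scores, bias, k):
                load[e] += 1
        if step == 0:
            first_load = load
        last_load = load
        bias = update_bias(bias, load, step_size)
    return first_load, last_load


if __name__ == "__main__":
    first, last = simulate()
    print("load at first step:", first)
    print("load at last step: ", last)
```

In this toy setup the bias only affects which experts are selected, so balance is steered directly rather than through an extra loss term. That is the design contrast the paragraph draws with auxiliary-loss approaches.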

